I have now covered all of the ground I wished to cover regarding the construction of rate stats for various frameworks for evaluating individual offense, so I think this would be a good point to take stock of the conclusions I have drawn. From here on out, I will write about these tenets as if they are inviolable, which is not actually the case (“sound” and “well-reasoned to me” are as far as I’m willing to go), but I’m moving on to other topics:

* For team offense, Runs/Out is the only proper rate stat

* If evaluating individuals as teams (i.e. applying a dynamic run estimator like Runs Created or Base Runs directly to an individual’s statistics), Runs/Out is also the proper rate stat. Any other choice breaks consistency with treating the individual as a team, and consistency is the only thing going for such an approach. On a related note, don’t treat individuals as teams.

* If using a linear weights framework, the proper rate stat is RAA/PA, R+/PA, or a restatement of those (wOBA is the one commonly used). These metrics all produce consistent rank orders and can be easily restated from one another. Any of them is a valid choice at the user’s discretion, with the only objective distinction being whether you want ratio and differential comparability (R+/PA), only care about differential comparability (R+/PA or RAA/PA), or would prefer a different scale altogether at the sacrifice of direct comparisons without further modifications (wOBA).

* If using a theoretical team framework, R+/O+ and R+/PA+ are equivalent, with the user needing to decide whether they want to think about individual performance in terms of R/O or R/PA.

Throughout this series, I have not worried about context, and originally envisioned a final installment that would briefly discuss some of the issues with comparing players’ rates across league-seasons. In putting it together, I decided that it will take a few installments to do this properly. It will also take another league-season to pair with the 1994 AL in order to make cross-context comparisons.

I have chosen the 1966 AL to fill this role. In the expansion era (1961 – 2019), the AL has averaged 4.52 R/G. 1994 was third-highest at 5.23, 15.6% higher than average. The closest league to being an inverse relative to the average is the 1966 AL (3.89 R/G, 13.8% lower than average). The obvious choice would have been 1968, since it was the most extreme, but as 1994 was not the most extreme I thought a league that was similarly low scoring would be more appropriate.

The other reason I like the 1966 AL for this purpose is that, just as was the case in 1994, the superior offensive player in the league was a future Hall of Fame slugger named Frank. In making comparisons across the twenty-eight year and (more importantly) 1.34 R/G differences between these two league-seasons, the two Franks will serve as our primary reference points.

To look at the 1966 AL we will first need to define our runs created formulas. I will not repeat the explanations of how these are calculated – I used the exact same approach as for the 1994 AL, which is demonstrated primarily in parts 1, 9, and 10.

Base Runs:

A = H + W – HR = S + D + T + W

B = (2TB - H – 4HR + .05W)*.79920 = .7992S + 2.3976D + 3.996T + 2.3976HR + .0400W

C = AB – H = Outs

D = HR

BsR = (A*B)/(B + C) + D
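As a minimal sketch, the Base Runs equations above can be written as a small Python function. The function and argument names are my own, and the stat line in the usage example is made up purely for illustration (it is not any real player's 1966 line). Note that the code uses `doubles` for the counting stat and `d` for the home run term, since the formulas reuse the letter D for both.

```python
def base_runs_factors(singles, doubles, triples, hr, walks, ab):
    """Return the (A, B, C, D) Base Runs factors using the 1966 AL coefficients."""
    hits = singles + doubles + triples + hr
    total_bases = singles + 2 * doubles + 3 * triples + 4 * hr
    a = hits + walks - hr                                        # baserunners
    b = (2 * total_bases - hits - 4 * hr + 0.05 * walks) * 0.7992  # advancement
    c = ab - hits                                                # outs
    d = hr                                                       # automatic runs
    return a, b, c, d


def base_runs(singles, doubles, triples, hr, walks, ab):
    """BsR = A*B/(B + C) + D."""
    a, b, c, d = base_runs_factors(singles, doubles, triples, hr, walks, ab)
    return a * b / (b + c) + d


# Hypothetical stat line, for illustration only.
print(round(base_runs(100, 30, 5, 25, 70, 550), 1))
```

The expanded form of B in the text (.7992S + 2.3976D + ...) is the same calculation with the multiplier distributed, apart from rounding in the walk coefficient.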

Linear Weights:

LW_RC = .4560S + .7751D + 1.0942T + 1.4787HR + .3044W - .0841(outs)

LW_RAA = .4560S + .7751D + 1.0942T + 1.4787HR + .3044W - .2369(outs)

wOBA = (.8888S + 1.2981D + 1.7075T + 2.2006HR + .6944W)/PA
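The three linear weights formulas can be sketched the same way. The function names are mine, outs and PA are passed in explicitly rather than derived (so the sketch makes no assumption about how PA is counted), and the inputs in the usage example are hypothetical.

```python
def lw_rc(singles, doubles, triples, hr, walks, outs):
    """Linear weights runs created, 1966 AL coefficients."""
    return (0.4560 * singles + 0.7751 * doubles + 1.0942 * triples
            + 1.4787 * hr + 0.3044 * walks - 0.0841 * outs)


def lw_raa(singles, doubles, triples, hr, walks, outs):
    """Linear weights runs above average: same event weights, larger out penalty."""
    return (0.4560 * singles + 0.7751 * doubles + 1.0942 * triples
            + 1.4787 * hr + 0.3044 * walks - 0.2369 * outs)


def woba(singles, doubles, triples, hr, walks, pa):
    """wOBA with the 1966 AL weights; pa is supplied by the caller."""
    return (0.8888 * singles + 1.2981 * doubles + 1.7075 * triples
            + 2.2006 * hr + 0.6944 * walks) / pa


# Hypothetical stat line, for illustration only.
line = dict(singles=100, doubles=30, triples=5, hr=25, walks=70)
print(round(lw_rc(outs=390, **line), 1), round(lw_raa(outs=390, **line), 1))
print(round(woba(pa=620, **line), 3))
```

LW_RC and LW_RAA differ only in the out coefficient, so the gap between them for any batter is .1528 runs per out.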

Theoretical Team:

TT_BsR = (A + 2.246PA)*(B + 2.346PA)/(B + C + 7.915PA) + D - .6658PA

TT_BsRP = ((A + 2.246PA)*(B + 2.346PA)/(B + C + 7.915PA) + D + .185PA)*PAR – .8519PA

TT_RAA = ((A + 2.246PA)*(B + 2.346PA)/(B + C + 7.915PA) + D + .185PA)*PAR – .9572PA
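A rough sketch of the theoretical team formulas follows. A, B, C, and D are the Base Runs factors defined above. PAR is not restated in this installment, so the sketch simply takes it as an argument (`par`) that the caller supplies from the earlier parts; the function names and the example inputs are hypothetical.

```python
def tt_core(a, b, c, d, pa):
    """Shared term: the batter's factors blended into the reference team."""
    return (a + 2.246 * pa) * (b + 2.346 * pa) / (b + c + 7.915 * pa) + d


def tt_bsr(a, b, c, d, pa):
    """TT_BsR: runs added to the theoretical team, per the formula above."""
    return tt_core(a, b, c, d, pa) - 0.6658 * pa


def tt_bsrp(a, b, c, d, pa, par):
    """TT_BsRP: par must be computed as described earlier in the series."""
    return (tt_core(a, b, c, d, pa) + 0.185 * pa) * par - 0.8519 * pa


def tt_raa(a, b, c, d, pa, par):
    """TT_RAA: same as TT_BsRP with a larger per-PA subtraction."""
    return (tt_core(a, b, c, d, pa) + 0.185 * pa) * par - 0.9572 * pa


# Hypothetical factors and PAR value, for illustration only.
print(round(tt_bsr(205, 234.6, 390, 25, 620), 1))
print(round(tt_bsrp(205, 234.6, 390, 25, 620, par=1.0), 1))
```

Because TT_BsRP and TT_RAA share everything but the final term, they always differ by .1053 runs per PA.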

Using these equations, let’s look at the leaderboards for the key stats for each framework. First, for the player as a team:

Of course, we’ll get slightly different results from the different frameworks, but two things should be obvious: Robinson was the best offensive player in the league (his lead over Mantle in second place is bigger than the gap from Mantle to ninth-place Curt Blefary, and he was also among the leaders in PA), and the difference in league offensive levels carried through to individuals.

Next for the linear weights framework:

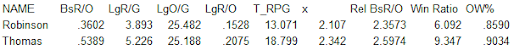

And finally for the theoretical team framework:

Now comes the hard part – how do we compare these performances from the 1966 AL against those from the 1994 AL? It should be implied, but in all comparisons that follow, I am only concerned about the value of a given player’s contributions relative to the context in which he played; this is not about whether/to what extent the overall quality of play differed between 1966 and 1994, or about how the existence of pitcher hitting in 1966 but not in 1994 impacted the league average, etc. I also am not going all the way in accounting for context, as I’ve still done nothing to park-adjust. Park adjustments are important, but whether you adjust or not is irrelevant to the question of which rate stat you should use. I’m also not interested in how “impressive” a given rate is due to the variation between players (e.g. z-scores) – I’m simply trying to quantify the win value of the runs a player has contributed.

The obvious first step to compare players across two league-seasons is to compare the difference or ratio of their rate to the league rate. As we’ve discussed throughout this series, some stats can be compared using both differences and ratios, but others can only be compared (at least meaningfully) with one or the other, and others still can be compared but the ratio or difference no longer has any baseball meaning unless some transformation is carried out (wOBA is the most prominent example we’ve touched on).

The table below shows the rates for our two Franks, the respective league rate, and the difference and ratio (if applicable) for each of the key rate stats we’ve looked at:

We’ve expressed each player’s rate relative to the league average, but we haven’t answered the question of which construct is appropriate – the difference or the ratio, or does the answer differ depending on the metric? And how can we make that determination? Absent some guiding principle, it is just a matter of personal preference. Most people are drawn to ratios, and the widespread adoption of metrics like ERA+, ERA-, OPS+, and wRC+ speaks to that preference. This case illustrates one of the reasons why ratios make sense intuitively – while the Franks are very close when comparing ratios, Thomas has a healthy lead when comparing differences, as the league average R/PA and R/O for 1994 are much higher than the same for 1966. It seems intuitive that a batter will be able to exceed the league average by a larger difference when it is .2419 rather than .1528.

Still, in order to really understand how to compare players across contexts, and to ensure that our intuition is grounded in reality, we need to think about how runs relate to wins. All of the metrics in that table, which I consider the key metrics discussed in this series, are expressed in runs, and this is a natural starting point as the goal of an offense is to score runs. Of course, the ultimate reason why an offense wants to score runs is so that they might contribute to wins.

I will break one of the rules I’ve tried to adhere to by begging the question and asserting that Pythagenpat and associated constructs are the correct way to convert runs to wins. Of course it is just a model, and while it is a good one, it is not perfect, but I think it is the best choice given its accuracy and its seemingly reasonable results for extreme situations.

Using Pythagorean also lends itself to adoption of a ratio rate stat, as one way to express the Pythagorean Theorem is that the expected ratio of wins to losses is equal to the ratio of runs to runs allowed raised to some power. If we start by thinking about the team/player as team framework for evaluating offense, it is natural to construct a win-unit rate stat by treating the team’s R/O as the numerator and the league average as the denominator. The ratio of these two, raised to some power, is then the equivalent win ratio that results. This is exactly the approach that Bill James took in his early Baseball Abstracts.

We can easily use this relationship to demonstrate that a simple difference of R/O does not capture the differences in win values between players. If player A exceeds the league average R/O by 10% and player B exceeds it by 50%, then assuming a Pythagorean exponent of 2, player A will have a “win ratio” of 1.21 (1.10^2) and player B will have a win ratio of 2.25 (1.50^2). Even if we convert those to winning percentages (.5475 and .6923), the difference between the two players’ R/O does not capture the win value difference.
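The arithmetic in this example is easy to check with a few lines of Python. This is just a sketch of the fixed-exponent case; the function names are mine.

```python
def win_ratio(relative_rate, exponent=2.0):
    """Win ratio implied by a run ratio under a fixed Pythagorean exponent."""
    return relative_rate ** exponent


def win_pct(ratio):
    """Convert a win ratio (W/L) into a winning percentage."""
    return ratio / (ratio + 1.0)


for relative in (1.10, 1.50):
    wr = win_ratio(relative)
    print(f"relative R/O {relative:.2f}: win ratio {wr:.2f}, W% {win_pct(wr):.4f}")
```

The two relative rates are 40 points apart, but the win ratios are 104 points apart and the winning percentages about 145 points apart, which is the sense in which the raw difference fails to track win value.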

The ratio doesn’t either, of course, since it would need to be squared, but if we assume a constant Pythagorean exponent like 2, this is simply a matter of scaling, with no impact on the rank order of players. However, if we use a custom Pythagorean exponent, this assumption breaks down, as we can illustrate by comparing the two Franks. Since we’re starting with the assumption that Pythagenpat is correct, this means that we will always need to make some kind of adjustment in order to convert our run rate into an equivalent win rate.

Since we are treating the players as teams, the consistent approach is to first calculate the RPG for each player as a team, then calculate the Pythagenpat exponent for the situation, then convert their relative BsR/O to an equivalent win value. The RPG for the 1966 AL was 3.893 with 25.482 O/G, while in the 1994 AL it was 5.226 with 25.188 O/G.

T_RPG = LgR/G + BsR/O*LgO/G

x = T_RPG^.29 (for a Pythagenpat z value of .29)

Relative BsR/O = (BsR/O)/(LgBsR/O)

Win Ratio = (Relative BsR/O)^x

Offensive Winning Percentage = Win Ratio/(Win Ratio + 1) = (BsR/O)^x/((BsR/O)^x + (LgBsR/O)^x)
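The chain of equations above can be sketched as one function. Two things here are assumptions on my part rather than anything stated in the text: the league BsR/O is taken to equal league R/G divided by league O/G, and the player rate used in the example (.300) is a made-up value, not either Frank's actual figure.

```python
def offensive_win_pct(player_bsr_per_out, lg_runs_per_game, lg_outs_per_game, z=0.29):
    """Player-as-team OW% via Pythagenpat; returns (T_RPG, x, win ratio, OW%)."""
    # Assumption: league BsR/O equals league runs per out.
    lg_bsr_per_out = lg_runs_per_game / lg_outs_per_game
    # Team of nine clones on offense, league average runs allowed.
    t_rpg = lg_runs_per_game + player_bsr_per_out * lg_outs_per_game
    x = t_rpg ** z
    relative = player_bsr_per_out / lg_bsr_per_out
    ratio = relative ** x
    return t_rpg, x, ratio, ratio / (ratio + 1.0)


# 1966 AL context from the text; the .300 BsR/O is hypothetical.
t_rpg, x, ratio, ow = offensive_win_pct(0.300, 3.893, 25.482)
print(f"T_RPG {t_rpg:.2f}, x {x:.3f}, win ratio {ratio:.3f}, OW% {ow:.3f}")
```

A league-average hitter comes out at exactly .500 regardless of the exponent, since a relative rate of 1 raised to any power is 1.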

Here, we conclude that just looking at Relative BsR/O understates Thomas’ superiority, as Pythagenpat estimates more wins for a given run ratio when the RPG increases (e.g. a run ratio of 8/4 will produce more wins than a run ratio of 7/3.5).
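The parenthetical example can be checked directly at the team level. This is a small sketch using the same z of .29; the function name is mine.

```python
def pythagenpat_win_pct(runs_per_game, runs_allowed_per_game, z=0.29):
    """Expected W% with the exponent set by the run environment (RPG ** z)."""
    rpg = runs_per_game + runs_allowed_per_game
    x = rpg ** z
    ratio = (runs_per_game / runs_allowed_per_game) ** x
    return ratio / (ratio + 1.0)


# Same 2:1 run ratio, different run environments.
high_env = pythagenpat_win_pct(8.0, 4.0)   # 12 RPG
low_env = pythagenpat_win_pct(7.0, 3.5)    # 10.5 RPG
print(f"{high_env:.4f} vs {low_env:.4f}")
```

Both teams outscore their opponents two to one, but the higher-scoring environment carries a larger exponent and therefore a higher expected winning percentage.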

If we were actually going to use this framework, we could leave our final relative rate stat in the form of a win ratio or OW%, but I would prefer to convert it back to a run rate. Even within the conceit of the player as a team framework, it’s hard to know what to do with an OW% (or the even less familiar win ratio). We can say that Thomas’ OW% of .903 means that a team that hit like Thomas and had league average defense (runs allowed) would be expected to have a .903 W%, but even if you follow the player as team framework, you would probably like to have a result that can be more easily tied back to the player’s performance as the member of a team, not what record the Yuma Mutant Clone Franks would have. One way to return the win ratio to a more familiar scale is to convert it back to a run ratio.

Let’s define “r” to be the Pythagenpat exponent for some reference context, which I will define to be 8.83 RPG – the major league average for the expansion era (1961 – 2019). We can then easily convert our estimated win ratios for the Franks to run ratios that would produce the same estimated win ratio in the reference (8.83 RPG) environment.

The calculation is simply (Win Ratio)^(1/r). Since r = 8.83^.29 = 1.881, this becomes Win Ratio^.5316, which produces 2.614 for Robinson and 3.282 for Thomas. Following the time-honored custom of dropping the decimal place, we wind up with a win-equivalent relative BsR/O of 261 for Robinson and 328 for Thomas, compared to their initial values of 217 and 260 respectively. These both increased because both players would radically alter their run environments, increasing the win value of their relative BsR/O. I would demonstrate the lesser impact on more typical performers if I cared about this framework beyond being thorough.
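The conversion back to a reference-environment run ratio can be sketched end to end. As an illustration I use Thomas' rounded relative BsR/O of 2.60 as the input, and I assume the league BsR/O equals league R/G divided by league O/G (which makes the player-as-team RPG equal to league R/G times one plus the relative rate). Because the input is rounded, the result should land close to, but not exactly on, the 3.282 quoted above. Function names are mine.

```python
def win_ratio_from_relative(relative, lg_runs_per_game, z=0.29):
    """Pythagenpat win ratio for a player-as-team with the given relative BsR/O."""
    # Assumption: league BsR/O == league R/O, so T_RPG = LgR/G * (1 + relative).
    t_rpg = lg_runs_per_game * (1.0 + relative)
    return relative ** (t_rpg ** z)


def reference_run_ratio(win_ratio, reference_rpg=8.83, z=0.29):
    """Run ratio that yields the same win ratio in the reference environment."""
    r = reference_rpg ** z
    return win_ratio ** (1.0 / r)


wr = win_ratio_from_relative(2.60, 5.226)   # Thomas, 1994 AL, rounded input
print(f"win ratio {wr:.2f}, OW% {wr / (wr + 1):.3f}, "
      f"win-equivalent relative BsR/O {reference_run_ratio(wr):.2f}")
```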

While I do think that this example demonstrates that just looking at a ratio of R/O does not appropriately capture the difference in win impact between players, the individual OW% approach goes many steps too far. It is, however, the logical conclusion of the player as a team methodology. If you were on that train until we got to the end of the line, I’d encourage you to consider jumping off at one of the earlier stations (I’d prefer you get on the green or red linear weights or theoretical team lines rather than ever embarking on the player as team blue line). Next time, I’ll explore the paths to win-unit rate stats using those frameworks.
