It’s understandable that the editing process for Baseball Prospectus 2021 overlooked something trivial like explaining what a metric in the team prospectus box means. After all, it must have been exhausting work to ensure that each of the many political non sequiturs in the book was on message (Status: success! You can give this book to your children to read with confidence that they are in a safe space, with no deviation from the blessed orthodoxy). With the vital imperative of ideological conformity handled, they would next have needed to run a fine-tooth comb over every reference to the aesthetics of present-day MLB on-field play to ensure the proper level of smug conflation of one’s own preferences with the perfect ideal. Another success. Finally, they could turn their attention to making sure there was the requisite number of sneering statements about the fact that there even was an MLB season in 2020. As always, left unaddressed was how a publication that exists (in theory, at least; reading the 2021 annual, this may be a fatally flawed assumption on my part) to analyze professional baseball could continue to exist if professional baseball ceased to exist, but who knows? When you toe the line so perfectly, maybe you can figure out a way to get in on some of that sweet $1.9 trillion.

So it is entirely understandable that a publication rooted in statistical analysis could completely overlook such a triviality as explaining a metric that none of its writers ever bother to refer to anyway. The metric in question is called “dWin%”. It didn’t replace any team metric listed in the 2020 edition; it literally fills in a blank space in the right data column. A search for the terms “dWin%” and “Deserved Winning Percentage” on the BP website doesn’t yield any obvious relevant hits (at least none that aren’t paywalled). So the best I can do is make an educated guess about what this metric is.

I gave away my guess by searching for “Deserved Winning Percentage”. BP has adopted a family of metrics with the “Deserved” prefix that use Jonathan Judge’s mixed model methodology to adjust for all manner of effects (going well beyond the staples of traditional sabermetrics like league run environment and park). The team prospectus box lists “DRC+” and “DRA-”, which are DRC (for hitters) and DRA (for pitchers) indexed to the league average. So it’s only natural to assume that dWin% is some combination of the two that yields a team’s “deserved” winning percentage.

It’s also natural to assume a close relationship among DRC+, DRA-, and dWin%. The first two are in essence run ratios: with myriad adjustments, of course, but essentially estimates of the percentage difference between a team’s deserved rate of runs scored or allowed and the league average. If we were in the realm of actual runs scored and allowed, or runs created and runs created allowed, we could confidently state that a Pythagorean approach is one powerful way to express the relationship. Namely, the square of the ratio of DRC+ to DRA- should be close to the ratio of dWin% to its complement.
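The Pythagorean relationship above can be sketched in a few lines of Python. This sketch assumes, as the paragraph does, that DRC+ and DRA- can be treated as a team's runs-scored and runs-allowed rates relative to league average. It uses the exponent of 2 described above. The function name is hypothetical, and this is not BP's dWin% calculation.

```python
def pythagorean_win_pct(drc_plus: float, dra_minus: float, exponent: float = 2.0) -> float:
    """Hypothetical helper: estimate W% from indexed offense and pitching.

    Assumes DRC+/DRA- behaves like a runs-scored to runs-allowed ratio, so
    W/L ~= (DRC+/DRA-) ** exponent (the Pythagorean form).
    """
    if drc_plus <= 0 or dra_minus <= 0:
        raise ValueError("DRC+ and DRA- must be positive")
    odds = (drc_plus / dra_minus) ** exponent  # implied ratio of wins to losses
    return odds / (1.0 + odds)                 # convert W/L odds into W%


# A league-average offense and pitching staff should come out at .500.
print(f"{pythagorean_win_pct(100, 100):.3f}")  # 0.500
# The A's figures quoted later in this post: 103 DRC+, 98 DRA-.
print(f"{pythagorean_win_pct(103, 98):.3f}")   # 0.525
```

Because DRA- strips out fielding, an estimate built this way would miss whatever the defense contributes, which is the first caveat below.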

There are two obvious caveats to throw on this conclusion:

1) While the statistical introduction does not specifically refer to DRA- (it refers just to DRA, which was the team figure listed in place of DRA- in the 2020 edition), it’s reasonable to assume that DRA- is the indexed version of DRA. DRA is a pitching metric, which attempts to state a pitcher’s deserved runs allowed after removing the impact of the defense that supports him. This means that comparing DRC+ and DRA- at the team level likely ignores fielding, and thus the relationship I’ve posited above would be incomplete. I should stress that this is not the fault of BP, except to the extent that we are left to speculate about the meaning of these metrics; there’s certainly nothing wrong with having a measure that attempts to isolate the performance of a team’s pitching staff.

2) It is possible that something else besides fielding is going on in the construction of the Deserved family of metrics that would invalidate this manner of combining the offensive and pitching components. Without being privy to the full nature of the adjustments made in these metrics, it’s hard to speculate on what, if anything, that might be, but I would be remiss not to raise the possibility that something is going on behind the curtain, or that I have simply overlooked something.

I’m not going to run a chart of all of the team values, because that would be infringing on BP’s property rights, and given the first paragraph of this post that would be practically unwise even if it were not morally objectionable. A few summary points provide defensible ground:

1) The average of the team DRC+s listed in the annual is 99.3, and the average of the DRA-s is 99.5. Given that the figures are rounded to the nearest whole number (e.g. 99 means 99% of league average), this is encouraging, as we would expect the league average to be 100.

2) The average of the team dWin%s is .464. Less encouraging: in a closed league every win is matched by a loss, so the league-wide average should be .500.
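A toy simulation shows why .464 is troubling. In a closed league every game produces exactly one win and one loss. So when every team plays the same number of games, the average winning percentage is .500 no matter how unevenly talent is spread. The sketch below assumes a balanced round-robin and a simple strength-ratio model for each game's win probability. Both are illustrative assumptions, not a model of the 2020 schedule.

```python
import itertools
import random


def simulate_league_mean_win_pct(n_teams=30, games_per_pair=2, seed=2020):
    """Play a balanced round-robin between teams of unequal (hypothetical)
    strength and return the unweighted mean winning percentage."""
    rng = random.Random(seed)
    strength = [rng.uniform(0.5, 1.5) for _ in range(n_teams)]  # made-up team quality
    wins = [0] * n_teams
    games = [0] * n_teams
    for a, b in itertools.combinations(range(n_teams), 2):
        p_a = strength[a] / (strength[a] + strength[b])  # chance that team a wins
        for _ in range(games_per_pair):
            winner = a if rng.random() < p_a else b
            wins[winner] += 1
            games[a] += 1
            games[b] += 1
    pcts = [w / g for w, g in zip(wins, games)]
    return sum(pcts) / n_teams


print(f"{simulate_league_mean_win_pct():.3f}")  # 0.500
```

Unequal game counts can nudge an unweighted average slightly off .500, but only slightly.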

As I was reading through the book, there were two team figures that really caught my eye and led me to this more formal examination. The first was the Phillies.
The Deserved family of metrics has always produced some eyebrow-raising results, which are difficult to evaluate objectively given the somewhat black-box nature of the metrics and the complexity of the mathematical approach involved (I will be the first to admit that “mixed models” of the kind described are beyond my own mathematical toolkit). So it’s dangerous to focus too much on any particular result, as it may just become a vehicle for exposing one’s own ignorance. For a second-generation sabermetrician, this is a particular nightmare: becoming the sportswriter you laughed at as a twelve-year-old for dismissing RC/27 as impossibly complex and unintelligible.

Still, it is quite remarkable that the team that allowed the second-most park-adjusted runs per inning in the majors might actually have turned in the second-best pitching performance. In fairness, it was a sixty-game season, so the deviation between the underlying quality of performance and the actual outcome could be enormous, and the East could have been the toughest of the three sub-leagues, especially in terms of balance, as the Dodgers tip the scales West. Most significantly, DRA- is just a pitching metric, and the Phillies’ defense was dreadful at turning balls in play into outs: they were last in the majors in DER at .619.
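DER (Defensive Efficiency Record) measures the share of balls in play that a defense converts into outs. The sketch below uses one common approximation: one minus hits on balls in play divided by balls in play. BP's own formula may differ in details such as how it treats errors and sacrifices. The function name and the inputs in the example are hypothetical, not the Phillies' actual totals.

```python
def defensive_efficiency(pa, hits, home_runs, walks, strikeouts, hbp):
    """Hypothetical helper: approximate DER from batters faced and outcomes.

    Balls in play exclude walks, strikeouts, hit batters, and home runs;
    DER is the fraction of those balls in play that did not fall for hits.
    """
    balls_in_play = pa - walks - strikeouts - hbp - home_runs
    hits_in_play = hits - home_runs
    return 1.0 - hits_in_play / balls_in_play


# Made-up totals: 640 balls in play, 220 of which fell for hits.
print(f"{defensive_efficiency(1000, 250, 30, 90, 230, 10):.3f}")  # 0.656
```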

However, every factor that would explain how their pitching was actually second-best does nothing to explain how their overall deserved team performance was also second-best. Adjusting away terrible defensive support doesn’t mean the team didn’t deserve its poor runs-allowed record; it just means the blame should be pinned on the fielders rather than the pitchers. Again, it’s hard to pinpoint any exact criticism given the nature of the metrics, but this one is tough to accept at face value.

It also seems that if one had conviction in the result, it would show up in the narrative somewhere. There’s always been a disconnect between what BP statistics say and what their authors write, which owes partly to the ensemble approach to writing and presumably partly to timing (the authors of team chapters probably start very soon after the season, without the benefit of the full spread of data that will appear in the book). Still, this disconnect seems to have grown with the advent of the Deserved metrics, which often tell a very different story than even the mainstream traditional sabermetric tools (e.g. EqA or FIP, to name metrics previously embraced by BP). But I can assure you that if I believed the Phillies’ underlying performance as a team was actually second only to the Dodgers’, I’d work that into any retrospective of their 2020 season and forecast of their 2021.

The second team that caught my eye was the A’s, who posted a 103 DRC+, 98 DRA-, and .499 dWin%. The obvious disconnect between an above-average offense and above-average pitching on one hand and a sub-.500 deserved W% on the other could be explained by defense. What can’t be explained is how a .499 dWin% ranks ninth in the majors, at least until you line up the thirty teams and see that the average is .464. While we can charitably assume that a combination of our own ignorance and the proprietary nature of the calculations explains many odd results from the deserved stats, I don’t know what could satisfactorily explain a W% metric that averages .464 for the whole league.

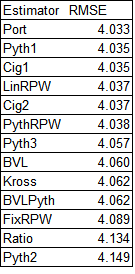

The hope is that this is simply some scalar error, a fudge factor not applied somewhere. There is some evidence that this is the case: if you take the ratio of DRC+ to DRA- and plot it against the ratio of dWin% to (1 - dWin%), you get a correlation of +0.974 and a pretty straight line, as you would expect given what should be in the vicinity of a Pythagorean relationship. It might even work out as you’d expect if dWin% is baking in fielding.
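The correlation check described above can be sketched as follows. The helper names are hypothetical. The sketch also fits a single constant k in odds = k * (DRC+/DRA-)^2. That fit is an illustrative way to probe the "scalar error" idea, not a documented BP procedure. A k well below 1 on real team data would be consistent with a missing fudge factor, though any fielding baked into dWin% would also be absorbed into k.

```python
import math


def pearson_r(xs, ys):
    """Plain Pearson correlation coefficient between two equal-length lists."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def pythagorean_check(drc_plus, dra_minus, dwin_pct, exponent=2.0):
    """Return (r, k): the correlation of DRC+/DRA- with dWin%/(1 - dWin%),
    and the constant k that best fits odds = k * ratio**exponent in log space."""
    run_ratios = [o / p for o, p in zip(drc_plus, dra_minus)]
    win_odds = [w / (1.0 - w) for w in dwin_pct]
    r = pearson_r(run_ratios, win_odds)
    # Least-squares log(k) with a fixed exponent is the mean gap between
    # log(odds) and exponent * log(ratio).
    log_k = sum(math.log(o) - exponent * math.log(q)
                for o, q in zip(win_odds, run_ratios)) / len(run_ratios)
    return r, math.exp(log_k)
```

On synthetic inputs built from odds = 0.8 * ratio^2, the function recovers k = 0.8 and a correlation very close to 1. Real team data would, of course, be noisier.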

Still, it’s disappointing that the question has to be asked.