This methodology is really unnecessary, and very possibly has a flaw somewhere inside that I was unable to spot. However, one night in September the Rays/Yankees game I was watching went into a rain delay, and not wanting to do any real work, I used the time to fiddle around with a rating system for teams that incorporated strength of schedule. Never one to simply let an idle experiment rest in peace without milking a couple of blog posts out of it, I am compelled to describe the Crude Team Rating (CTR) here.

There is nothing novel about the idea--similar ratings which are likely based on better theory are published by Baseball Prospectus, Beyond the Box Score, Andy Dolphin, Baseball-Reference, and others. The concept is simple; the execution in this case is likely muddled.

Let me offer an example of how this works with a hypothetical four-team league composed of the Alphas, Bravos, Charlies, and Ekos. The 90-game schedule is not balanced; the Alphas/Bravos and Charlies/Ekos are in "divisions" and play each other 40 times, playing their cross-divisional foes 25 times each. The Alphas go 25-15 against the Bravos and 17-8 against both the Charlies and the Ekos; the Bravos go 14-11 against both the Charlies and the Ekos; the Charlies go 24-16 against the Ekos. We wind up with these standings:

The Bravos and Charlies are equal in the standings, but we have every reason to believe that the Bravos are a better team--they won their season series against both teams from the other division, and had to play forty games against the Alphas, who are clearly the league's dominant team. Obviously strength of schedule worked against the Bravos.

Let's start by looking at each team's win ratio (W/L, which of course is also W%/(1 - W%)):

These do not average to 1, because of the nature of working with ratios rather than percentages (the average W% is .500 in this league--don't worry, I made sure my standings added up). The average win ratio for the league will always be greater than one, unless all of the teams are .500. What we can do now is calculate the average win ratio for each team's opponents. The Alphas' opponents had an average win ratio of (40*.915 + 25*.915 + 25*.636)/90 = .838. This is the initial strength of schedule, S1.
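For anyone who would rather see the arithmetic than read it, here is a minimal Python sketch of the win ratio and S1 steps. The team records are inferred from the win ratios quoted above, and the names `records`, `schedule`, `win_ratios`, and `strength_of_schedule` are made up for illustration; this is not the spreadsheet itself.

```python
# Records inferred from the win ratios quoted in the text (an assumption).
records = {"Alphas": (59, 31), "Bravos": (43, 47),
           "Charlies": (43, 47), "Ekos": (35, 55)}

# Games against each opponent: 40 within the division, 25 across.
schedule = {
    "Alphas": {"Bravos": 40, "Charlies": 25, "Ekos": 25},
    "Bravos": {"Alphas": 40, "Charlies": 25, "Ekos": 25},
    "Charlies": {"Ekos": 40, "Alphas": 25, "Bravos": 25},
    "Ekos": {"Charlies": 40, "Alphas": 25, "Bravos": 25},
}


def win_ratios(records):
    """W0 = W/L for each team."""
    return {team: wins / losses for team, (wins, losses) in records.items()}


def strength_of_schedule(ratings, schedule):
    """Games-weighted average of opponents' ratings for each team."""
    sos = {}
    for team, opponents in schedule.items():
        games = sum(opponents.values())
        sos[team] = sum(g * ratings[opp] for opp, g in opponents.items()) / games
    return sos


w0 = win_ratios(records)
s1 = strength_of_schedule(w0, schedule)
avg_s1 = sum(s1.values()) / len(s1)
```

With these inputs, `s1["Alphas"]` rounds to .838, and `avg_s1` rounds to the 1.092 figure used in the next step.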

Next, we can adjust each team's win ratio for their strength of schedule. First, though, I need to point out a flaw that is inherent in the CTR approach--it does not recognize the degree to which a team's SOS is affected by its own quality. The Alphas will take a hit on this count, because all of the teams they play against have poor records. Of course, based on what we know, the Alphas actually play the second-toughest schedule in the league because of the forty games with the Bravos. To the algorithm used here, they have a weak schedule because all the teams they play have losing records. That is true, but the reason the Bravos have a losing record is that they play the Alphas so often.

I could attempt to adjust for this in some way, but I've chosen to let it slide--that's one of many reasons why these are self-proclaimed crude ratings. Of course, the effect is much more pronounced in this hypothetical when teams are playing 44% of their games against the same opponent, and is much less pronounced in a 30 team league in which the most frequent opponents play only 12% of the time.

Once we have the initial strength of schedule S1, we need to find avg(S1)--the average S1 for all teams in the league. In this example it is 1.092. To adjust each team's W0 for SOS, we multiply by the ratio of S1 to avg(S1). This gives us the first-iteration adjusted win ratio, which I'll call W1:

W1 = W0*(S1/Avg(S1))

For the Alphas: W1 = 1.903*.838/1.092 = 1.459

Now that we have an adjusted win ratio, we can re-estimate SOS in the same manner as before, producing S2. This is necessary because we now have a better estimate of the quality of each team, and that knowledge should be reflected in the SOS estimate. S2 for the Alphas is .916.

In order to find W2, we need to compare S2 to S1. We can't simply apply S2 to W1, because W1 already includes an adjustment for SOS. S2 supersedes S1; it doesn't act upon it as a multiplier or addition. We also can't apply S2 to W0, the initial win ratio, because the schedule adjustment of S2 is based on each team's W1. At each step in the process, the previous iteration has to be seen as the new starting point, and the adjustment has to be a comparison between the new iteration and the one that directly preceded it.

So W2 is figured in the same manner as W1, except the ratio of S2 to the average is compared to the same for S1:

W2 = W1*(S2/Avg(S2))/(S1/Avg(S1))

For the Alphas, W2 = 1.459*(.916/1.029)/(.838/1.092) = 1.692

Then we use W2 to figure S3, and use S3 to figure W3, and the process continues through as many iterations as you feel like setting up in Excel (at least for me). For the purpose of this example, I did nine; for the actual spreadsheet for ML teams, I went a little overboard and did thirty iterations. That results in a "final" estimate of win ratio, W9. It is not of course truly final as that would be W(infinity). The "final" estimate of SOS is S10, so that the last estimate of SOS is based on the last estimate of team quality.
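The whole loop fits in a few lines of Python. What follows is a sketch under the same assumptions as the example (records inferred from the quoted win ratios, nine iterations), with invented names like `iterate_ratings` and `relative`; it is not a transcription of the spreadsheet.

```python
# Records inferred from the win ratios quoted in the text (an assumption).
records = {"Alphas": (59, 31), "Bravos": (43, 47),
           "Charlies": (43, 47), "Ekos": (35, 55)}
schedule = {
    "Alphas": {"Bravos": 40, "Charlies": 25, "Ekos": 25},
    "Bravos": {"Alphas": 40, "Charlies": 25, "Ekos": 25},
    "Charlies": {"Ekos": 40, "Alphas": 25, "Bravos": 25},
    "Ekos": {"Charlies": 40, "Alphas": 25, "Bravos": 25},
}


def strength_of_schedule(ratings, schedule):
    """Games-weighted average of opponents' ratings for each team."""
    return {
        team: sum(g * ratings[opp] for opp, g in opps.items()) / sum(opps.values())
        for team, opps in schedule.items()
    }


def relative(values):
    """Divide each value by the league average of those values."""
    mean = sum(values.values()) / len(values)
    return {team: v / mean for team, v in values.items()}


def iterate_ratings(w0, schedule, iterations):
    """Return [W0, W1, ..., Wn], where each step applies S(n)/Avg(S(n))
    and divides out the previous step's S(n-1)/Avg(S(n-1))."""
    history = [dict(w0)]
    prev_rel = {team: 1.0 for team in w0}  # no adjustment applied yet
    for _ in range(iterations):
        current = history[-1]
        rel = relative(strength_of_schedule(current, schedule))
        history.append({t: current[t] * rel[t] / prev_rel[t] for t in current})
        prev_rel = rel
    return history


w0 = {team: w / l for team, (w, l) in records.items()}
history = iterate_ratings(w0, schedule, iterations=9)
w9 = history[-1]
s10 = strength_of_schedule(w9, schedule)  # SOS based on the last ratings
```

One thing the code makes easy to see: because each step divides out the previous adjustment, the product telescopes, and W(n) works out to W0 times S(n)/Avg(S(n)).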

One undesirable effect of the iterative process is that the average W9 is no longer equal to the actual average win ratio for the league, and the distribution is not the same. Thus, when the adjusted win ratios are converted into winning percentages (W% = WR/(1 + WR)), they are not guaranteed to average to .500, which of course is a logical must.

In order to convert W9 into an adjusted winning percentage, first figure an initial W% for each team:

iW% = W9/(1 + W9)

Dividing by the average of iW% will force the new adjusted W% to average to .500 for the league:

aW% = iW%/Avg(iW%)*.5
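As a quick illustration of the two formulas, here is a small Python sketch. The input ratios are invented for the example (they are not the W9 values from the hypothetical league), and `adjusted_win_pct` is just a name I picked.

```python
def adjusted_win_pct(final_ratios):
    """Convert final win ratios to W%, then rescale so the league averages .500."""
    initial = {team: w / (1 + w) for team, w in final_ratios.items()}  # iW%
    mean = sum(initial.values()) / len(initial)
    return {team: p / mean * 0.5 for team, p in initial.items()}       # aW%


# Invented win ratios, for illustration only.
example = {"A": 1.6, "B": 1.1, "C": 0.9, "D": 0.6}
aw = adjusted_win_pct(example)
league_average = sum(aw.values()) / len(aw)
```

Whatever ratios go in, `league_average` comes out at .500 (up to floating point), and the ordering of the teams is unchanged.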

The results for the theoretical league are illustrative of the strength of schedule problem I touched on earlier--the Alphas' aW% is lower than their actual W%, as they did not have to play against themselves. It really doesn't make sense to consider what a team's record would be if they played themselves, but thankfully this distortion becomes more trivial as the number of teams in a league increases.

aW% might seem like a logical measure to use as the final outcome of the system, but I actually prefer a scale which remains grounded in win ratio. So I convert W9 into the final product, Crude Team Rating, by dividing W9 by the average W9. This is different from aW%, which forces the average winning percentage back to .500; this adjustment forces the average rating to 1 (or 100 when the decimal point is dropped), which is a nice property to have for the overall rating:

CTR = W9/Avg(W9)

In the same manner, a final strength of schedule metric that is centered at 1 is:

SOS = S10/Avg(S10)
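Both re-centerings are the same operation, so one helper covers them. A minimal sketch, with made-up inputs and an assumed `scale` argument for dropping the decimal point:

```python
def center(values, scale=100):
    """Divide by the league average so the ratings average to `scale`."""
    mean = sum(values.values()) / len(values)
    return {team: scale * v / mean for team, v in values.items()}


# Invented final win ratios, for illustration only.
final_ratios = {"A": 1.6, "B": 1.1, "C": 0.9, "D": 0.6}
ctr = center(final_ratios)
```

Because every team is divided by the same number, the ratio between any two teams' ratings is the same as the ratio between their win ratios.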

The CTR and SOS for the four hypothetical teams are:

This post is already a mess to read, so at this point I'm going to break it up into sections on particular aspects of the methodology that I wish to expound upon:

Why is win ratio used throughout the process rather than W%?

I use win ratio because it is much easier to work with; for instance, the ratios between the various SOS estimates can be multiplied by the win ratio to find an adjusted win ratio. The math would not be anywhere near as straightforward if W% were used. Using win ratio also allows for a larger spread in the final CTRs; I could use CTR = aW%/Avg(aW%), but the range would be narrower.

Most importantly, though, is that win ratios can be plugged directly into Odds Ratio (equivalent to Log5) to estimate W% for matchups between teams. If a team with a win ratio of 1.2 plays a team with a win ratio of .9, they can be expected to win 57.1% of the time--1.2/(1.2 + .9). There would be equivalent but messier math if working with W%.

Due to this property, we can use CTR to estimate head-to-head winning percentages. CTR is not a true win ratio, since it has been re-centered at 100, but the re-centering is done with a common scalar and so it has no effect on ratios of team CTRs. So a team with a CTR of 130 can be expected to win 52% of the time against a team with a 120 CTR--130/(130 + 120).
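In code, the odds ratio estimate is a one-liner. The function name is mine; the two checks are the examples from the text.

```python
def expected_win_pct(rating_a, rating_b):
    """Odds ratio estimate of team A's W% against team B.
    Works with win ratios or CTRs, since only the ratio of the two matters."""
    return rating_a / (rating_a + rating_b)


from_win_ratios = expected_win_pct(1.2, 0.9)  # about .571
from_ctrs = expected_win_pct(130, 120)        # .52
```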

I estimated W% for the Alphas against each of their opponents using CTR. The fact that they are close to the actual results should not be taken as any type of indication that the head-to-head estimates are accurate, as I obviously came up with the numbers to follow logically. They are offered just to show how the ratings can be used to estimate W% in a head-to-head matchup:

Your ranking system is novel and unique, right?

Wrong. There's nothing new about it. It is really quite similar to the Simple Ranking System used by the Sports-Reference sites, except it operates on ratios rather than differentials. As mentioned above, there are a number of similar and likely more refined approaches utilized by other analysts.

It's really quite simple:

1. Assign each team an initial ranking

2. Use those initial rankings to estimate SOS for each team

3. Compare SOS to the average schedule and adjust initial ranking accordingly

4. Repeat until rankings stabilize

You haven't adjusted for home-field advantage, have you?

No, I haven't, either in the process of estimating team strength (I don't break down a team's schedule into home and road games) or by producing adjustments for CTR at home and on the road (i.e. having a formula that tells you that a 130 overall CTR team has an equivalent 110 CTR on the road and 150 at home). The former falls outside the scope of an admittedly crude rating system; the latter is something that is easy enough to account for on the fly.

While there is a lot that could be said about incorporating home field advantage (writing about some of it is on my to-do list), the simplest thing to do is to incorporate a home field edge into the odds ratio calculation. The long-term major league average is for the home team to win 54% of the time, which is a win ratio of 1.174. I call the square root of that win ratio "h" (1.083). If a team is away, divide their CTR by h; if they are home, multiply it by h.

Suppose that we have a 125 CTR team hosting a 110 CTR team. Absent home field advantage, we'd expect the home team to win 125/(125 + 110) = 53.2% of the time. But the 125 team is now an effective 135.4 team (125*1.083), and the 110 team is now an effective 101.6 team (110/1.083), and so the expected outcome is now a home win 57.1% of the time.

Equivalently, one can figure the odds ratio probability by first dividing the two CTRs (125/110 = 1.136), and then dividing that ratio by itself plus one (1.136/2.136 = 53.2%). If you approach it in this manner, you need to multiply the ratio by h^2 (1.174) rather than h (125/110*1.174 = 1.334 and 1.334/2.334 = 57.1%). I prefer the former method, because it produces a distinct new rating for each team based on whether they are home or away rather than accounting for it all in one step, but it is a matter of preference and has no computational impact.
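Both routes to the home field adjustment are sketched below in Python. The 54% home winning percentage comes from the text; the function names are invented for the example.

```python
import math

HOME_WIN_PCT = 0.54                                   # long-term home W% cited above
H = math.sqrt(HOME_WIN_PCT / (1 - HOME_WIN_PCT))      # about 1.083


def home_win_prob_by_rating(home_ctr, away_ctr, h=H):
    """Adjust each team's rating for venue, then apply the odds ratio."""
    effective_home = home_ctr * h
    effective_away = away_ctr / h
    return effective_home / (effective_home + effective_away)


def home_win_prob_by_ratio(home_ctr, away_ctr, h=H):
    """Same estimate in one step: scale the ratio of ratings by h squared."""
    ratio = home_ctr / away_ctr * h ** 2
    return ratio / (1 + ratio)


p_rating = home_win_prob_by_rating(125, 110)
p_ratio = home_win_prob_by_ratio(125, 110)
```

The two functions agree to within floating point error, which is the "no computational impact" point made above.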

CTR is based on actual win ratio. Why don't you use expected win ratio from runs and runs allowed or runs created and runs created allowed?

One can very easily substitute expected win ratio for actual win ratio. I have three variations: eCTR, gCTR, and pCTR. eCTR uses win ratio estimated from actual runs scored and allowed, while gCTR uses "Game EW%" (based on runs scored and allowed distribution taken independently in this post) and pCTR uses win ratio estimated from runs created and runs created allowed.

The discussion of what inputs to use helps to illustrate another flaw in the methodology--there is no regression built in to the system. For the purpose of ranking a team's actual W-L results, there is no need for regression, but if one is using the system to estimate a team's W% against an opponent, it is incorrect to assume that a team's sample W% is equal to the true probability of them winning a game. Even if one did not want to regress the W/L ratio of the team being rated, it would make sense to regress the records of their opponents in figuring strength of schedule. I've done neither.

Are there any other potential applications of CTR?

One can always think up different ways to use a method like this (and since methods similar in spirit but superior in execution to CTR already exist many of them have been implemented already); the question is whether the results are robust enough to provide value in a given function. I'll offer one possible use here, which is using a team's strength of schedule adjustment to estimate an adjustment factor for their players' performance.

There are some perfectly good reasons why one would not want to adjust individual performance for the strength of his opponents, but if that is something in which you are interested, CTR might be useful. If a team's opponents have an expected win ratio of .9, then based on the Pythagorean formula with an exponent of two, their equivalent run ratio should be sqrt(.9) = .949. Custom exponents could be used as well, of course, but two will suffice for this example.

So a pitcher on a team with a .9 SOS could have his ERA adjusted by dividing by .949 to account for the weaker quality of opposition. This approach assumes that the team's opponents are evenly balanced between offense and defense. One could put together a CTR-like system that broke down runs scored and allowed separately, but that would require the use of park factors or home/road breakdowns, and would greatly complicate matters.
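A rough sketch of that adjustment in Python follows. The 4.00 ERA is an invented input, the function names are mine, and the exponent defaults to the Pythagorean two used in the example.

```python
def sos_run_ratio(sos_win_ratio, exponent=2.0):
    """Run ratio implied by an opponents' win ratio under Pythagorean logic."""
    return sos_win_ratio ** (1 / exponent)


def sos_adjusted_era(era, sos_win_ratio, exponent=2.0):
    """Divide ERA by the opponents' equivalent run ratio.
    Assumes opponents' quality is split evenly between offense and defense."""
    return era / sos_run_ratio(sos_win_ratio, exponent)


run_ratio = sos_run_ratio(0.9)           # about .949
adjusted = sos_adjusted_era(4.00, 0.9)   # hypothetical 4.00 ERA pitcher
```

Facing weaker-than-average opposition (SOS below 1) pushes the adjusted ERA up, about 4.22 in this made-up case.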

Speaking of park factors, one could use an iterative approach to calculate park factors (Pete Palmer's PF method takes this path). Instead of simply comparing a team's home and road RPG as I do, you could look at the team's road games in each park, calculate an initial adjustment, and iterate until the final park factors stabilized. At some point I’ll cover this application in detail.

Wait, there's something messed up with the 100 scale. A .500 team will not get a 100 CTR.

You're right, they won't, but it's not a problem with the scale--it's a feature of it. (You are free to prefer a different scale, of course). This issue is hinted at in the section about why win ratio is used, but I didn't address it explicitly there. The scale is designed so that a team with an average win ratio gets a 100 rating, not so that an average team (which would by definition play .500) gets a 100 rating.

Others have pointed out the potential dangers in working with ratios rather than percentages--assuming that ratios work as percentages can result in mathematical blunders. Suppose we have two football teams that comprise a league, one which goes 1-15 and the other that goes 15-1. Obviously, the average record for a team in this league is 8-8 with a winning percentage of .500. Such a team would have a win ratio of 1.

But what is the average win ratio for a team in this league? Not the win ratio for a hypothetical average team--the arithmetic mean of the win ratios of the teams in this league. It is (15/1 + 1/15)/2 = 7.533. It's not even close to 1.

Obviously this is an extreme example, but the principle holds--teams that are an equal distance from .500 will not see their win ratios balance to 1. The average win ratio for a real league will always be >= 1. The effect is stronger in leagues in which win ratios deviate more from 1. In the 2009 majors, for instance, the average win ratio was 1.039. In the 2009 NFL, it was 1.386.
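The two-team example is easy to check in Python, and the same function shows why mirrored records never balance: for a ratio r and its mirror 1/r, the average (r + 1/r)/2 is at least 1, with equality only when r = 1. The function name is just for illustration.

```python
def mean_win_ratio(records):
    """Arithmetic mean of W/L across a list of (wins, losses) records."""
    return sum(wins / losses for wins, losses in records) / len(records)


lopsided = mean_win_ratio([(15, 1), (1, 15)])   # the two-team football league
mirrored = mean_win_ratio([(10, 6), (6, 10)])   # a milder mirrored pair
all_even = mean_win_ratio([(8, 8), (8, 8)])     # every team at .500
```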

I could have set up the ratings so that a team with a .500 record (i.e. 1 win ratio) was assured of receiving a ranking of 100 by simply not dividing what is called W(f) below by Avg[W(f)], but it also would have ensured that the average of all of the team ratings would not be 100. In the first spreadsheet I put together, that's exactly what I did, but I decided that it was more annoying to have to remember what the league average of the ratings was (especially when looking at aggregate rankings for divisions and leagues) than it was to remember that a .500 team would have a ranking of 96 or so. It's purely a matter of aesthetics and personal preference.

Generic Formulas

W0 = W/L for aCTR; gEW%/(1 - gEW%) for gCTR; (R/RA)^x for eCTR; (RC/RCA)^y for pCTR

where x = ((R + RA)/G)^z and y = ((RC + RCA)/G)^z, where z is the Pythagenpat exponent (I use .29 out of habit)

for a league of t teams, where G is total games played for a team and g(i) is the number of games against a particular opponent:

S1 = (1/G)*{SUM(i = 1 to t)[g(i)*W0(i)]}

W1 = W0*(S1/Avg(S1))

S(n) for n > 1 = (1/G)*{SUM(i = 1 to t)[g(i)*W(n-1)(i)]}

W(n) for n > 1 = W(n-1)*[S(n)/Avg(S(n))]/[S(n-1)/Avg(S(n-1))]

For final win iteration (f) in the specific implementation (f = 30 in my spreadsheet):

S(f + 1) = (1/G)*{SUM(i = 1 to t)[g(i)*W(f)(i)]}

iW% = W(f)/[1 + W(f)]

aW% = iW%/Avg(iW%)*.5

CTR = W(f)/Avg(W(f))

SOS = S(f + 1)/Avg(S(f + 1))
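The alternative W0 definitions at the top of this list can be sketched in Python as follows. The function names and the 800/700 run totals are made up for illustration; the .29 exponent is the one mentioned above.

```python
def win_ratio_from_pct(win_pct):
    """W0 for gCTR: convert a winning percentage (e.g. gEW%) to a win ratio."""
    return win_pct / (1 - win_pct)


def pythagenpat_win_ratio(scored, allowed, games, z=0.29):
    """W0 for eCTR (runs) or pCTR (runs created).
    The exponent is runs per game, both teams combined, raised to z."""
    exponent = ((scored + allowed) / games) ** z
    return (scored / allowed) ** exponent


even_team = pythagenpat_win_ratio(700, 700, 162)   # equal runs gives a ratio of 1
good_team = pythagenpat_win_ratio(800, 700, 162)   # invented totals
```

Everything after W0 in the formulas is the same regardless of which of these inputs is used.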

Monday, January 31, 2011

Crude Team Ratings

Saturday, January 29, 2011

Monday, January 24, 2011

Run Distribution and W%, 2010

A couple of caveats apply to everything that follows in this post. The first is that there are no park adjustments anywhere. There's obviously a difference between scoring 5 runs at Petco and scoring 5 runs at Coors, but if you're using discrete data there's not much that can be done about it unless you want to use a different distribution for every possible context. Similarly, it's necessary to acknowledge that games do not always consist of nine innings; again, it's tough to do anything about this while maintaining your sanity.

All of the conversions of runs to wins are based only on 2010 data. Ideally, I would use an appropriate distribution for runs per game based on average R/G, but I've taken the lazy way out and used the empirical data for 2010 only.

This post also contains little in the way of "analysis" and a lot of tables. This is probably a good thing for you as the reader, but I felt obliged to warn you anyway.

I've run a post like this in each of the last two years, and I always started with an examination of team record in one-run, blowout (5+ margin), and other (2-4 run margin) games. While I was aware of the havoc that bottom of the ninth/extra innings can unleash on one-run records, I have to admit that I was understating the impact, and thus giving a lot more attention to one-run records than they deserved. So this year I've made it simple with two classes: blowouts (5+ margin) and non-blowout (margin of 4 or less).

72.6% of games are non-blowouts; 27.4% are blowouts. Of course, "blowout" is not an appropriate description for many five run games, but I've got to call it something. Team records in non-blowouts:

The standard deviation of W% in non-blowouts is .050, compared to .068 for all games and .138 for blowouts. Blowout records:

The much wider range is easy to see. The next chart shows each team's percentage of non-blowouts and blowouts, as well as the difference in W% between the two classes (figured as blowout W% minus non-blowout W%):

Colorado had the fewest blowouts, with only 20%, which is a bit of an oddity as a strong hitter's park increases the likelihood of a blowout (obviously team quality is a strong factor as well). Milwaukee led the way with 36% blowouts, and while their 25 blowout wins tied for tenth, their 33 blowout losses trailed only the Pirates.

Anyone who reads this blog doesn't need the reminder, but a quick perusal of this list should remind you that the notion that good teams make their hay by winning close games is bunk. Good teams have better records in blowout games than non-blowout games as a rule. Even a team like San Francisco which did not have many blowouts (only 22%) had a strong record (22-13) when they did find themselves in such a contest.

Blowouts/non-blowouts is not a very granular way of looking at margin of victory data; they simply divide all victory margins into two classes. This chart shows the percentage of games decided by X runs, and the cumulative percentage of games decided by less than or equal to X runs:

The most common margin was one run, and each successive margin was less frequent than its predecessor (except for sixteen, and by that point so what?). About half of games were decided by two runs or less, and about 10% were decided by seven runs or more.

Team runs scored and allowed in wins and losses can serve as interesting tidbits, and as a reminder that there is nothing unusual about having a large split between performance in wins and performance in losses. First, here are team runs scored for wins and losses, along with the ratio of each to the team's overall scoring average:

The average team scored 6.06 in their wins, which is 139% of the overall average; in losses the average is 2.69 runs, or 62% of average.

Runs allowed in wins and losses:

Another application for the data is the average margin of victory for each team. The chart that follows lists each team's average run differential in wins (W RD); losses (L RD); and the weighted average of the two (absolute value--otherwise we'd have plain old run differential). The average margin of victory was 3.41 runs:

The White Sox were the model team, with their winning and losing margins both scoring direct hits on the league average. The Yankees had the highest run differential in wins (3.93), while Baltimore had the lowest (2.51). High losing margins congregated in the NL Central, as Pittsburgh, Milwaukee, Houston, and Chicago were all at or just below four and lost by .22 runs more than fifth-ranking Kansas City. In what is just a coincidence, Texas and San Francisco had the smallest average losing margins; the Rockies and Rays joined them in keeping losses under three runs/game.

Texas' average game was the closest in the majors (3.02 differential). Milwaukee was on the other extreme, with their 4.00 margin easily the highest in the majors. I would figure the medians, but a column filled with 3s wouldn't be very interesting.

I will now shift gears from margins of victory to counts of runs scored and allowed in games. For a manageable look at the data, I've split games into three classes. Low scoring games are those in which a team scores 0-2 runs; 30.6% of 2010 games fell into this class, and teams had a .144 W%. Medium scoring games are 3-5 runs, representing 38% of games and a .503 W%. High scoring games feature 6+ runs, occurring 31.4% of the time with teams winning at a .844 clip.
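For anyone who wants to run the same breakdown on their own game logs, here is a minimal Python sketch of the three classes. The sample games are invented, and `scoring_class` and `class_summary` are names chosen for the example.

```python
def scoring_class(runs):
    """Low is 0-2 runs, medium is 3-5, high is 6 or more."""
    if runs <= 2:
        return "low"
    if runs <= 5:
        return "medium"
    return "high"


def class_summary(games):
    """games: non-empty list of (runs_scored, won) pairs.
    Returns {class: (share of games, W% in those games)}."""
    totals = {"low": [0, 0], "medium": [0, 0], "high": [0, 0]}
    for runs, won in games:
        bucket = totals[scoring_class(runs)]
        bucket[0] += 1                 # games in this class
        bucket[1] += 1 if won else 0   # wins in this class
    n = len(games)
    return {
        name: (count / n, wins / count if count else None)
        for name, (count, wins) in totals.items()
    }


# Invented sample: (runs scored, whether the team won).
sample = [(1, False), (2, True), (4, True), (4, False), (7, True), (9, True)]
summary = class_summary(sample)
```

The same function works for runs allowed if you feed it (runs allowed, won) pairs instead.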

These classifications are nice because they are somewhat symmetric. Each category contains roughly 1/3 of games (that might be too strong, but it's the best one can do), and medium games are very close to a .500 W%, with low and high scoring games having roughly complementary W%s. Of course, it doesn't work out so neatly when the run environment is more extreme. 2010's 4.38 R/G average was pretty standard, but if you look at a season like 1996 (5.04 R/G), things don't work out as nicely: 24.3% low-scoring (.123 W%), 37.5% medium-scoring (.441), and 38.2% high-scoring (.798).

Caveats aside, here are the game type frequencies by team:

I'll let you peruse these tables for whatever tidbits you might find, because at this point it's no longer sporting to point and laugh at the Mariners, and it largely corresponds to what you already know about team offense. The same holds for the flip side--runs allowed broken down into low, medium, and high:

Of course, teams had a .856 W% in games in which they held their opponents to a low score, .497 in medium games, and .156 in high-scoring games.

We could also look at team records in games by scoring class. This chart shows team records in games grouped by runs scored:

To the extent that this data tells you anything, it tells you more about the opposite unit (defense in this case). It has a lot more use as trivia than analytical fodder. Detroit was 1-37 (.026) in games in which they scored 0-2 runs, while Philadelphia was 13-38 (.255) in such contests. Even when Seattle had a high-scoring game, they didn't clean up to the extent that other teams did (19-6, .760).

I found a few of the factoids in the reverse (record in games grouped by runs allowed) a little more interesting:

Boston (42-1, .977) and Baltimore (28-1, .966) were head and shoulders above the rest of the teams when holding opponents to a low-scoring game--the next fewest number of losses was four, with the Yankees having the most wins with four losses. Even allowing a moderate number of runs spelled doom for the Mariners, as they were just 16-40 (.286) when allowing 3-5 runs; Pittsburgh's 22-38 looked good in comparison.

The Mariners' performance when allowing six or more runs was something else entirely: 1-53 (.019). What's odd is that Cincinnati, despite having a good offense, was the next worst team when allowing a lot of runs (1-31, .031). Philadelphia and Boston each managed to slug their way to a .304 W% in those games, meaning that Boston was the top-performing team both when their defense sparkled (42-1 in low scoring games) and when it was poor. It was a pedestrian record in the middle RA games (30-33) that held the Red Sox back.

I'll close with the most useful analytical tools in the article--what I call game Offensive W% (gOW%), game Defensive W% (gDW%), and game expected W% (gEW%). These metrics are based on a Bill James construct from the 1986 Abstract to evaluate teams based on their actual runs distribution. Instead of using the Pythagorean formula to estimate a team's W% assuming the other unit was average, he proposed multiplying the frequency of each runs scored level by the overall W% in those games. The resulting unit is the same as classic OW%, but it is based on the run distribution rather than the run average.

In order to figure gOW% (and the other metrics), one first needs an estimate for W% at each runs scored level. The empirical data for 2010:

The "marg" column shows the marginal benefit of each additional run; going from one to two runs increases W% by .122. Each run is incrementally more valuable through four, and then the benefit begins to decline, although this year's results have a blip in which the sixth run had a higher marginal impact than the fifth run.

The column marked "use" is the value I used to figure gOW% for each runs scored level. To smooth things out (crudely so, but nevertheless assuring that each additional run either left unchanged or increased the win estimate), I averaged the results for 9-12 runs per game. The "invuse" column is the complement of those values, which are used to figure gDW%. A more comprehensive explanation about how all of the W%s are calculated is included in the 2008 edition of this post.
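Here is a minimal sketch of the gOW% and gDW% calculation as described: weight the W% at each run level by how often the team landed there. The W% table in the code is a HYPOTHETICAL placeholder, not the 2010 "use" column, and capping high run totals at the top entry of the table is my simplifying assumption rather than the smoothing described above.

```python
from collections import Counter

# HYPOTHETICAL W% by runs scored -- placeholder values, not the 2010 data.
WIN_PCT_BY_RUNS = {0: 0.00, 1: 0.08, 2: 0.20, 3: 0.35, 4: 0.50,
                   5: 0.62, 6: 0.74, 7: 0.82, 8: 0.88, 9: 0.93}


def _lookup(runs, table):
    # Assumption: run totals above the largest tabled value use the top entry.
    return table[min(runs, max(table))]


def game_ow_pct(runs_scored, table=WIN_PCT_BY_RUNS):
    """gOW%: frequency of each runs-scored level times the W% at that level."""
    counts = Counter(runs_scored)
    games = len(runs_scored)
    return sum(n / games * _lookup(r, table) for r, n in counts.items())


def game_dw_pct(runs_allowed, table=WIN_PCT_BY_RUNS):
    """gDW%: same idea for runs allowed, using the complement of each W%."""
    counts = Counter(runs_allowed)
    games = len(runs_allowed)
    return sum(n / games * (1 - _lookup(r, table)) for r, n in counts.items())


steady = game_ow_pct([4, 4, 4, 4])     # always scores four
streaky = game_ow_pct([0, 0, 8, 8])    # same average, different distribution
```

With this placeholder table, the steady team comes out ahead of the streaky one despite identical run averages, which is the kind of difference between gOW% and OW% that the method is meant to pick up.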

For most teams, gOW% and OW% (that is, estimates of offensive strength based on run distribution and average runs/game respectively) are very similar. Teams with gOW% higher than OW% distributed their runs more efficiently (at least to the extent that the methodology captures reality; there are other factors including park effects, non-uniform inning games, covariance between runs scored and allowed, and failure of the empirical estimates of W% for each runs scored value); the reverse is true for teams with gOW% lower than OW%. The teams that had differences of +/- 2 wins between the two metrics were:

Positive: SEA, HOU, BAL, CLE, OAK

Negative: PHI, COL

You'll note that the positive differences tended to belong to bad offenses; this is a natural result of the nature of the game, and is reflected in the marginal value of each run as discussed above. Runs scored in a game are not normally distributed because of the floor at zero runs, and once you get to seven runs you'll win 80% of the time with average pitching, so additional runs have much less win impact.

The teams for which gDW% was out of sync with DW% by two or more games:

Positive: CHN

Negative: SF, BAL, TEX, NYA

Both pennant winners had inefficient distributions of runs allowed, but it obviously didn't hurt them too much. San Francisco's gDW% of .572 was still good for second in the majors behind San Diego, which had a similarly inefficient runs allowed distribution (although not quite extreme enough to make the above list).

gOW% and gDW% can be combined with a little Pythagorean manipulation to create gEW%, which is a measure of expected team W% based on runs scored and allowed distributions (treating the two as if they are fully independent). The teams which had two game deviations between the two estimates:

Positive: HOU, CHN, SEA, KC, NYN

Negative: SF, PHI, NYA, TEX, COL, SD

This happened to break down pretty clearly into a good team/bad team dichotomy, but that is not always the case. gEW% had a lower RMSE in predicting actual team W% than did EW% (2.63 to 2.83), which is not as impressive of a feat as it might sound. Given that gEW% gets the added benefit of utilizing run distribution data rather than aggregate runs scored, a tendency to under-perform EW% would be indicative of a serious construction flaw. In any event, both RMSEs for 2010 were well below the usual error for an R/RA W% estimator, which is generally in the neighborhood of four games. That tells us nothing, but as a long-time user and constructor of W% estimators, it certainly caught my eye.

Here is the full chart for the game and standard W%s discussed above, sorted by gEW%:

Tuesday, January 11, 2011

Hitting by Position, 2010

Offensive performance by position (and the closely related topic of positional adjustments) has always interested me, and so each year I like to examine the most recent season's totals. I believe that offensive positional averages can be an important tool for approximating the defensive value of each position, but they certainly are not a magic bullet and need to include more than one year of data if they are to be utilized in that capacity. So the discussion that follows is not rigorous and focuses on 2010 only.

The first obvious thing to look at is the positional totals for 2010, with the data coming from Baseball-Reference.com. "MLB" is the overall total for MLB, which is not the same as the sum of all the positions here, as pinch-hitters and runners are not included in those. "POS" is the MLB totals minus the pitcher totals, yielding the composite performance by non-pitchers. "PADJ" is the position adjustment, which is the position RG divided by the position (non-pitcher) average. "LPADJ" is the long-term positional adjustment that I use, based on 1992-2001 data. The rows "79" and "3D" are the combined corner outfield and 1B/DH totals, respectively:

Most of the positions matched the default PADJ fairly closely in 2010, with the big exception being DH (and thus, the 1B/DH combination as well). DHs only created 4.76 runs per game versus a position player average of 4.65. While first basemen were 18% better than average, 1B/DH were a combined 13% above average, about equal with RF. RF has outperformed LF since 2007, by similar margins, but I wouldn't read anything into that. As recently as 2006 LF outhit RF 5.6 to 5.4, and the players are fairly interchangeable. Perhaps there has been a slight trend to put weak-hitting leadoff types in left (like Scott Podsednik or Juan Pierre), but the samples for single seasons are small enough that a couple of unconventional team choices can create the narrow margin between the two corner spots.
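The PADJ arithmetic is simple enough to show with the one pair of numbers quoted above. A small Python sketch, with a function name of my own choosing:

```python
def position_adjustment(position_rg, non_pitcher_rg):
    """PADJ: a position's runs created per game relative to all non-pitchers."""
    return position_rg / non_pitcher_rg


# DH figures quoted in the text: 4.76 RG against a 4.65 position player average.
dh_padj = position_adjustment(4.76, 4.65)
```

That works out to roughly 1.02, so DHs were only about 2% better than the average position player in 2010.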

Pitchers hit at just 5% of the position player average, which is as low as they've ever been, although not significantly different from their performance over the past few seasons. A few notes apply to this and all of the subsequent team data: park adjustments are applied; runs above average are figured against the overall 2010 major league average for each position, with no distinction between AL and NL; and sacrifices are not included in any way, which is not a big deal for any position other than pitcher--this is their performance when allowed to swing away (or when failing to get a bunt down for a sacrifice). For the NL teams, pitchers' offensive performance was as follows:

After two years of Cubs pitchers leading the way, there was a new leader as Yovani Gallardo (.254/.329/.508 in 72 PA with 4 longballs) powered Milwaukee to the top of the heap. LA's -11 was the worst in the last three seasons, as Dodger pitchers' extra base contributions consisted of just two doubles.

Among AL teams, pitchers' PA ranged from 15 to 25, but only Boston managed to create more than one run per game (5.2 off a .306/.327/.368 line). After two consecutive years of failing to put a single pitcher on base, Toronto pitchers mustered a single, a double, and a walk. Two AL teams failed to have a pitcher reach base this season--Detroit and, naturally, Seattle. The nearly unfathomable offensive outage in the Pacific Northwest extended to the already ill-supported hurlers as well.

Next, let's look at the leading and trailing positions in terms of RAA. As mentioned above, all figures are park-adjusted but utilize the 2010 major league positional average, not the averages for each league. Additionally, left field and right field (but not first base and DH) are based on a combined average for the two positions.

I will not go through the formality of a chart for the leading positions, as the individuals responsible should be fairly obvious, and will rank highly on individual leader boards. It's a little different when dealing with batting order position, since even outstanding hitters are sometimes shuffled around in the lineup. Most top players stick to one position, though. The teams that led at each position were:

C--ATL, 1B--STL, 2B--NYA, 3B--BOS, SS--COL, LF--TEX, CF--TOR, RF--TOR, DH--MIN

Toronto's center fielders were a bit of a surprise for me, with Vernon Wells rebounding to lead the way. Pittsburgh and Andrew McCutchen were second on the list. CF had the smallest standard deviation of RG (.72); RF was close behind at .75. The largest standard deviation was at first base (1.5).

For the worst RAA at each position, the player listed is the one who played in the most games in that fielding position. It doesn't necessarily indicate that the player in question is personally responsible for such a dreadful showing:

Baltimore and Seattle each had multiple positions among the worst in the league; of course, it had to be Seattle that led with three. As an Indians fan, it is a shame to see the center fielders here; what made it worse was that Grady Sizemore contributed to the ineptitude when he was healthy enough to be in the lineup.

The following charts give the RAA at each position for each team, split up by division. The charts are sorted by the sum of RAA for the listed positions. As mentioned earlier, the league totals will not sum to zero since the overall ML average is being used and not the specific league average. Positions with negative RAA are in red; positions with +/- 20 RAA are bolded:

Philadelphia tied for the NL lead with six above average positions, although I can't for the life of me figure out how a position manned by the $25 million man couldn't muster any more than three RAA. Atlanta was the only NL team to boast three +20 positions. Florida led all of the majors with 70 RAA from middle infielders, which was enough to lift the entire infield to the top RAA in the NL despite below-average hitting from both corners.

Cincinnati tied with the Phillies in the NL with six above-average positions, led in corner infield RAA, and led in overall positional RAA. St. Louis had the NL's lowest middle infield RAA, but the highest outfield RAA and the #1 single position in baseball (first base, naturally). You can see that almost all of their plus performance came from their three positions manned by star-types (yes Tony, Colby Rasmus), but that outside of them only right field was above average. Pittsburgh and Houston both had just one above-average position, but the Astros only managed a +2 out of right field while Andrew McCutchen carried Pirate center fielders to a +23. Thus, Houston trailed the NL and 28 other clubs with -103 positional RAA.

It's hard to find a division with a smaller range of offensive performance from position players, 33 runs from top to bottom. San Francisco was the only team in the NL that didn't have a +/- 20 RAA position. San Diego had the worst offensive outfield in MLB, but that -37 was offset by first base alone, and the rest of the positions were just a bit below average.

New York was the only team above average at all positions, but only their second basemen were real standouts. It was still enough to lead in total positional RAA, although Cincinnati had a higher average given the DH. Toronto tied for the AL lead with three +20 positions. Baltimore was one of two teams to have four -20 positions, had the worst single position in MLB (first base), and had the lowest middle infield RAA in MLB.

Minnesota tied for the AL lead with three +20 positions. Kansas City was the only AL team without a +/- 20 RAA position. Without Shin-Soo Choo and right field, Cleveland would have been in big trouble. It's worth noting, though, that Indian DHs were pretty much average. While Travis Hafner's contract has inarguably been a huge impediment to the organization, Hafner the ballplayer is not killing the team on the field, and that's a distinction that fans are having trouble making.

Texas led the majors in outfield RAA despite a well-below-average contribution from their center fielders. The rest of the team offered little notable offense; the rest of the division even less. Seattle, as you can imagine, led in a lot of negatives: fewest above-average positions in the AL, most -20 positions in the majors, lowest corner infield RAA, lowest middle infield RAA, lowest infield RAA. The five -20 positions all ranked in the bottom seventeen positions in all of MLB. Let me write that again: of the seventeen worst offensive positions in the majors, five of them belonged to Seattle.

Here is a link to a spreadsheet with each team's performance by position.

Monday, January 03, 2011

Crude NFL Ratings

I feel bad about starting 2011 with a post about the third-best professional sport, but the material is time-sensitive. I came up (*) with a crude rating system for baseball teams last year, and it is equally applicable to teams from other sports. So I've been tracking NFL ratings throughout the season, and since the playoffs are about to start I figured that I would share them here.

(*) "came up" is a big stretch, actually, since while I'm not aware of anyone doing it in exactly the manner I did, that's mostly because the way I did it is not the best way to do it and because a lot of similarly-designed systems have worked with point differentials rather than estimated win ratios. "Implemented" would be a more appropriate and honest description.

I do not have a full write-up ready for the system yet; it's actually fairly simple (part of the reason why I'm only confident describing the results as "crude"), but I am going to do a full explanation that tries to express everything in incomprehensible math formulas rather than words. It's backwards to post results before methodology, but results of an NFL ranking system aren't very interesting after the season unless you are a huge NFL fan, and I am not.

The gist of the system is that you start by calculating each team's estimated win ratio, based on points/points allowed ratio (I used this formula from Zach Fein for the NFL). Then, you figure the average win ratio of their opponents and the average win ratio for all 32 teams in the NFL. To adjust for strength of schedule, you take (team's win ratio)*(opponents' win ratio)/(average win ratio). Now you have a new set of ratings for each team, and so you repeat the process, and you keep repeating until the values stabilize.
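For anyone who would rather see that loop than read about it, here is a minimal Python sketch. It is not the spreadsheet behind the ratings in this post: the function name `crude_team_ratings`, the `schedule` layout, the stopping tolerance, and the four-team league are all made up for illustration. It also assumes that each pass applies the freshly computed schedule adjustment to the team's original estimated win ratio, which is one reasonable reading of "repeat the process" and lets the values settle down.

```python
def crude_team_ratings(win_ratio, schedule, tol=1e-9, max_iter=1000):
    """Iteratively adjust estimated win ratios for strength of schedule.

    win_ratio: dict of team -> estimated win ratio (hypothetical input).
    schedule:  dict of team -> list of opponents, one entry per game.
    Returns a dict of team -> rating scaled so the league average is 100.
    """
    teams = list(win_ratio)
    rating = dict(win_ratio)  # the first pass uses the unadjusted win ratios
    for _ in range(max_iter):
        avg = sum(rating.values()) / len(teams)
        # Average current rating of each team's opponents, weighted by games.
        sos = {t: sum(rating[o] for o in schedule[t]) / len(schedule[t])
               for t in teams}
        # Assumption: the adjustment is applied to the ORIGINAL win ratio.
        new = {t: win_ratio[t] * sos[t] / avg for t in teams}
        change = max(abs(new[t] - rating[t]) for t in teams)
        rating = new
        if change < tol:
            break
    avg = sum(rating.values()) / len(teams)
    return {t: 100 * rating[t] / avg for t in teams}


# Hypothetical league: B and C have the same raw win ratio, but B plays
# the strongest team twice while C plays the weakest team twice.
win_ratio = {"A": 2.0, "B": 1.0, "C": 1.0, "D": 0.5}
schedule = {
    "A": ["B", "B", "C", "D"],
    "B": ["A", "A", "C", "D"],
    "C": ["D", "D", "A", "B"],
    "D": ["C", "C", "A", "B"],
}
ctr = crude_team_ratings(win_ratio, schedule)
for team, value in sorted(ctr.items(), key=lambda kv: -kv[1]):
    print(team, round(value))
```

In this toy league B comes out ahead of C even though their raw win ratios are identical, which is the whole point of the schedule adjustment.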

At that point, I take each team's adjusted win ratio/average win ratio and multiply by 100. This is the Crude Team Rating. Similarly, I figure the strength of schedule for each team. The nice thing about these ratings is that they are Log5-ready. If a team with a rating of 140 plays a team with a rating of 80 on a neutral field, they can be expected to win 140/(140 + 80) = 63.6% of the time.
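The neutral-field calculation is a one-liner; the helper name `log5_win_prob` is my own label for this sketch and not anything official.

```python
def log5_win_prob(rating, opp_rating):
    """Neutral-field win probability implied by two CTR-style ratings."""
    return rating / (rating + opp_rating)


p = log5_win_prob(140, 80)
print(f"{p:.1%}")  # 63.6%
```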

Since the system is crude, there are a number of things it doesn't account for: the field on which the game is actually played, the effect that a team has on its opponents' estimated win ratio (losing 31-7 reduces your expected win ratio, but it also makes your opponent look like a stronger team), any changes in team composition due to injuries and the like, and regression. This is by no means a comprehensive list.

The ratings are based solely on aggregate points and points allowed. Even if you restrict the inputs to aggregate season data, there are a number of other possible inputs that you could use--actual win/loss record, predicted win/loss record based on total yards, turnovers, and other inputs (akin to using Runs Created rather than actual runs scored for a baseball rating), or some combination thereof. I have not done that here (I'm not sufficiently motivated when it comes to NFL ratings, although I will offer a set of ratings based only on W/L with a slight adjustment), but have done some of that for MLB ratings.

To account for home field in making game predictions, I've assumed that the home field advantage is a flat multiplier to win ratio. Since the average NFL home-field record is a round .570 (a 1.326 win ratio), the expected win ratio of the matchup is multiplied by 1.326 (or divided if it is the road team). For example, in the 140 v. 80 matchup, the expected win ratio is 140/80 = 1.75. If the 140 team is at home, this becomes 140/80*1.326 = 2.321, and if they are on the road it becomes 140/80/1.326 = 1.320. So the expected winning percentage for the 140 team is 1.75/2.75 = 63.6% on a neutral field, 2.321/3.321 = 69.9% on their home field, and 1.320/2.320 = 56.9% on the opponents' field.
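Here is the same arithmetic as a short Python sketch. The function name `matchup_win_prob` and its `site` argument are invented for this example; the only inputs taken from the text are the .570 home record and the 140 v. 80 matchup.

```python
HOME_WIN_PCT = 0.570                     # average NFL home-field record
HFA = HOME_WIN_PCT / (1 - HOME_WIN_PCT)  # about 1.326 as a win ratio


def matchup_win_prob(rating, opp_rating, site="neutral"):
    """Win probability for the first team; site is 'home', 'road' or 'neutral'."""
    ratio = rating / opp_rating
    if site == "home":
        ratio *= HFA   # flat multiplier for the home team
    elif site == "road":
        ratio /= HFA   # and a flat divisor for the visitor
    elif site != "neutral":
        raise ValueError("site must be 'home', 'road' or 'neutral'")
    return ratio / (1 + ratio)


for site in ("neutral", "home", "road"):
    print(site, f"{matchup_win_prob(140, 80, site):.1%}")
```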

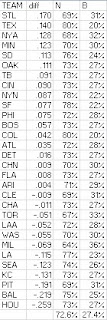

In this table with the 2010 rankings, aW% is the estimated "true" winning percentage for the team against a league-average schedule; "SOS" is strength of schedule; and "s rk" is the team's SOS rank.

The next chart gives ratings for each division, which is simply the average CTR of the four teams that comprise the division:

The NFC West was truly a dreadful division, with an average CTR of just 29. If you treat the division ratings as team ratings, that implies that the W% for a NFC West team against an average NFL team should have been 22.5%. The NFC West teams combined for an actual record of 25-39, which is 13-27 (32.5%) when you remove intra-divisional games. Of course, thanks to the unbalanced schedule, their average opponent is not an average NFL team.

With the playoff picture now being locked in, one can use the ratings to estimate the probability of the various playoff outcomes. I offer these as very crude probabilities based on crude ratings, and as nothing more serious. For these playoff probabilities, I assumed that each team's effective winning percentage should be 3/4 of its actual rating plus 1/4 of .500, and converted this to a win ratio. I have no idea if this is a proper amount of regression or not; I would guess it's probably not aggressive enough in drawing teams towards the center, but I really don't know. The key word is "crude", remember. That results in the following rankings for the playoff teams (this won't change the order in which they rank from the original CTR, but it will reduce the magnitude of the differences):
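As a rough sketch of that regression step: the function below assumes that a team's winning percentage against an average opponent is CTR/(CTR + 100), which follows from the Log5 property if the average team is rated 100. The name `regressed_win_ratio` and the `weight` parameter are just labels for this example.

```python
def regressed_win_ratio(ctr, weight=0.75):
    """Pull a rating toward .500, then convert back to a win ratio."""
    w_pct = ctr / (ctr + 100.0)                  # W% against an average team
    regressed = weight * w_pct + (1 - weight) * 0.5
    return regressed / (1 - regressed)


# A hypothetical team rated 300 (a .750 team) is pulled back to .6875,
# so its win ratio drops from 3.0 to 2.2.
print(round(regressed_win_ratio(300), 3))
```

An average team stays at a win ratio of exactly 1, and every other team moves toward it without changing places in the rankings.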

This chart illustrates why I don't like the NFL playoff seeding system, although this year is worse than most. In both conferences, the wildcard teams are estimated to be better than the lesser two division winners. In the case of Seattle this is completely uncontroversial, but Indianapolis was not a particularly impressive team and Kansas City played the estimated weakest schedule in the NFL. When your divisions are as small as four teams, a crazy year like this is bound to happen eventually. At the very least, I would suggest that the NFL allow wildcard teams to host playoff games if they have a better record than the division winner they are slated to play. When an 11-5 team like the Saints has to go on the road to play the 7-9 Seahawks, I suggest that your playoff structure is too deferential to your micro-divisions.

With those ratings, we can walk through each weekend of the playoffs. P(H) is the probability that the home team wins; P(A) is the probability that the away team wins. For later rounds, P is the probability that the matchup occurs (except for the divisional round, in which case P is the probability that the designated set of two matchups occurs):

According to the ratings, the road teams should be favored in each game this weekend. They suggest there's about a 16% chance that they all win, but only a 2% chance that all of the home teams win.
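The sweep probabilities are just products of the individual game probabilities, assuming the four games are independent. A small sketch, using made-up P(H) values rather than the figures from the table (the function name is also my own):

```python
from math import prod


def sweep_probabilities(p_home):
    """Return (P(all home teams win), P(all road teams win))."""
    all_home = prod(p_home)
    all_road = prod(1 - p for p in p_home)
    return all_home, all_road


# Hypothetical home-win probabilities for four games, NOT the table values.
p_home = [0.40, 0.35, 0.45, 0.30]
all_home, all_road = sweep_probabilities(p_home)
print(f"home sweep {all_home:.1%}, road sweep {all_road:.1%}")
```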

It's necessary to look at the possible division matchups together by conference thanks to reseeding. The most likely scenarios result in teams from just four of the eight divisions making it to the round of eight, with the AFC featuring divisional rematches (allowing the "Divisional" round to truly live up to its name) but an inter-divisional matchup in the NFC.

Once you get to the championship game level there are so many possibilities that there isn't much to say, so I'll move right on to the Super Bowl:

There are two potential Super Bowl matchups that come out at .1%; the least likely is considered to be Kansas City/Seattle (.013%, or about 7700-1).

Combining those tables, one can look at the advancement odds for each team:

Again, I need to issue the standard disclaimer--these are very crude odds based on a crude rating system.

Finally, here are ratings based on the actual W-L record rather than points/points allowed. I did cheat and add a half a win and half a loss to each team; as the Patriots and Lions have shown us in recent years, 16-0 and 0-16 are not impossible in the NFL, and either would break a ratio-based ranking system:
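The half-win, half-loss cheat amounts to the following; the name `padded_win_ratio` and the `pad` parameter are mine, and the sketch only covers the starting win ratio, not the schedule adjustment that follows.

```python
def padded_win_ratio(wins, losses, pad=0.5):
    """Win ratio with a small pad so perfect or winless teams stay finite."""
    return (wins + pad) / (losses + pad)


print(padded_win_ratio(16, 0))             # 33.0 rather than a division by zero
print(round(padded_win_ratio(0, 16), 3))   # 0.03 rather than a flat zero
```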