Let’s suppose that you were going to conjure up a model that fit the distribution of team runs per game. What properties would you like it to exhibit (ignoring for the moment whether a model with such properties is actually feasible)?

I would suggest the following:

1. It should be discrete. Runs scored in a game is a discrete quantity. There’s no such thing as a fractional number of runs scored. There’s nothing wrong with suggesting that a certain combination of events will generally create, say, 4.35 runs, but that 4.35 runs represents a weighted average of all the times that combination produces 0, 1, 2, 3, 4, 5, …, runs. The distribution function should reflect those probabilities.

2. It should be a standard, well-known probability distribution.

3. It should be accurate, and adaptable across a wide range of scoring contexts. This is the most important, but also should go without saying.

4. If possible, it should be simple. I am not suggesting trading accuracy or theoretical purity for simplicity, but this is a wish list.

Again, that is a wish list. In the real world, you have to model reality. That might mean accepting a continuous distribution as a model of a discrete function, or having a method that is useful in normal contexts but has some structural deficiencies that limits its utility at the extremes.

If we want to find a distribution that meets some of the criteria on the list, we have to start with discrete distributions. I would assume that the first such distribution that comes to mind for most people with any background in probability or statistics is the Poisson distribution. The Poisson distribution is simple, adaptable, and has a cool name and a cool Greek letter for a parameter. Unfortunately, it doesn’t work as a model of runs scored in baseball, despite some noble efforts to make it so.

Poisson does work fairly well (at least to the extent of my knowledge, which is limited) as a model of soccer and hockey scoring. Given that it is often used for situations in which events are expected to occur over a certain period of time (or area and other physical intervals), it makes intuitive sense that it wouldn’t fit baseball run scoring, since time is not a factor. A Poisson process should be able to be extended to time periods other than the basis--that is, if your mean is 3 goals per game for hockey, you should be able to use the distribution to estimate goals per period (with mean 1) or goals per ten games (with mean 30), or goals per 3714 games (with mean 11142).

This property obviously does not carry over to baseball due to the nature of the game. One could picture it working for converting between innings and games, but once you get to the inning level and start splitting into outs, the pattern of runs scored is not the same independent of the number of outs in the inning (i.e. more runs are scored with two out than zero out).

As you can see in the chart in the Clay Davenport article I linked above, the Poisson distribution severely underestimates the proportion of shutouts. This is an issue that can be sidestepped by using a modified value at zero, but the slope at non-zero runs is not close enough to reality to salvage a Poisson approach, and the relationship of the mean of runs scored in relation to the variance is not a match for Poisson.

In lieu of a simple distribution, those examining baseball scoring have had to be creative. The three-parameter Weibull distribution (a continuous distribution) has been used with good results by Dr. Steven Miller and Sal Baxamusa to model runs scored. The Tango Distribution, which models runs scored per inning, can be used to estimate the per game distribution with the use of some combination math published by Ben Vollmayr-Lee (zip link) (as an aside, one of the topics in statistics that confuses me the most is combinations and permutations. Sure, I understand why 9! gives you the number of possible batting orders for nine players, and I understand the binomial coefficient, but start asking more detailed questions about pulling colored balls out of urns with and without replacement and I started to wish that I was studying liberal arts.)

With the recent publication of Bill James’ old Baseball Analyst newsletters, I was able to read Dallas Adams’ article in the June 1982 issue in which he uses the following functions to estimate scoring distribution as a function of R/G:

When N is 5 runs or fewer: P(N) = A + B*N + C^2*N

where A = .38498 - .10839 (R/G) + .0080055(R/G)^2

B = .0010306 + .024139 (R/G) - .002943(R/G)^2

C = -.01879 + .002514(R/G) - .00001506(R/G)^2

When N is 6 runs or more: P(N) = D(E^N)

where D = 6.6210 - 2.4965(R/G) + .27518(R/G)^2

E = .058479 + .24022(R/G) - .022291(R/G)^2

That’s the kind of thing I’d like to avoid if at all possible, no offense to Mr. Adams and the others who have made the effort to work on this problem.

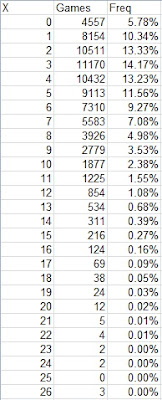

Let me close out this post by offering a quick look at the Adams formula and the failures of Poisson, while introducing a set of data that I’ll use to fit a model in the next installment. I have a spreadsheet (I believe it was put together by Clay Davenport or Keith Woolner, but I can’t find the original link) with the runs per game for each major league team 1981-96. The aggregate distribution for these teams was:

The easiest way to fit a Poisson model to this data is to use the maximum likelihood estimate that the parameter h is equal to the sample mean (this is also equivalent to the method of moments estimate in this case). In this case the mean R/G is 4.456 and the variance is 9.36 (those of you familiar with Poisson can already see this is not going to end well as a Poisson distribution has a mean equal to its variance).

The probability mass function for Poisson is:

P(x) = e^(-h)*h^x/x!

For example, the estimated probability of scoring 3 runs is e^(-4.456)*4.456^3/3! = .1712. The empirical probability in this case is ,1417, and this is one of the more accurate points:

As you can see, the Poisson model bunches too much probability around the mean, leaving estimates of high scoring games too low and estimates of low scoring games way too low.

I will also look at Adams’ formula just for kicks. The constants for 4.456 R/G are A = .0610, B = .0502, C = -.0079, D = .9606, E = .6863, yielding these estimated probabilities:

Adams better matches the actual distribution for normal amounts of runs scored, but overestimates the likelihood of extremely high run output. Since he uses formulas that don’t tie to a true probability distribution which adheres to the requirements thereof (most importantly that the sum of all probabilities is one), the estimated probabilities total to 1.03 and runs per game to 4.61.

My point is not to pick on a thirty-year old formula from a pioneering sabermetrician--the fact that luminaries such as Adams and Davenport have not been able to come up with a satisfying approach to this problem serves to illustrate its difficulty, and hopefully to cause you not to judge too harshly the method that I offer in the next post as flawed but useful in certain circumstances.

Before closing this post, let me provide a crude measure of the relative accuracy of the models. Basically, it is the chi-square test statistic. For each number of runs scored (0, 1, 2, etc.), calculate (Expected games with n runs - actual games with n runs)/Expected^2. Here, I will group all games of 15+ runs together, which could be a problem if we encounter a distribution that overpredicts extremely high scores.

I will be doing this test (which I’ll call X^2) without any of the trappings of an actual chi-square test: degrees of freedom, critical values, and the like. The point is not to perform a real significance test--it's to get a quick idea of how these models stack up against one another. I will at various points in this series be testing the model with the same data that was used to fit its parameters--obviously a no-no if you want an unbiased test. However, the first basic test for a model is whether or not it can describe the data it was fitted with. If it can’t do that, there’s absolutely no point in worrying about how it handle out of sample data. None of the models I’m going to be offering up in this series are clearly good enough to move on to that next step.

So, for the Poisson, Adams, and modified Adams (I rescaled so that the total probability would be equal to one) distributions, here are the X^2 values for the model versus the 1981-96 data:

Poisson 91996

Adams 371

Modified Adams 305

Poisson with no fudging obviously doesn’t match runs scored, but is provided here so that you can see how a truly worthless model fares.

Monday, June 04, 2012

On Run Distributions, pt.1: Introduction

Subscribe to:

Post Comments (Atom)

No comments:

Post a Comment

I reserve the right to reject any comment for any reason.